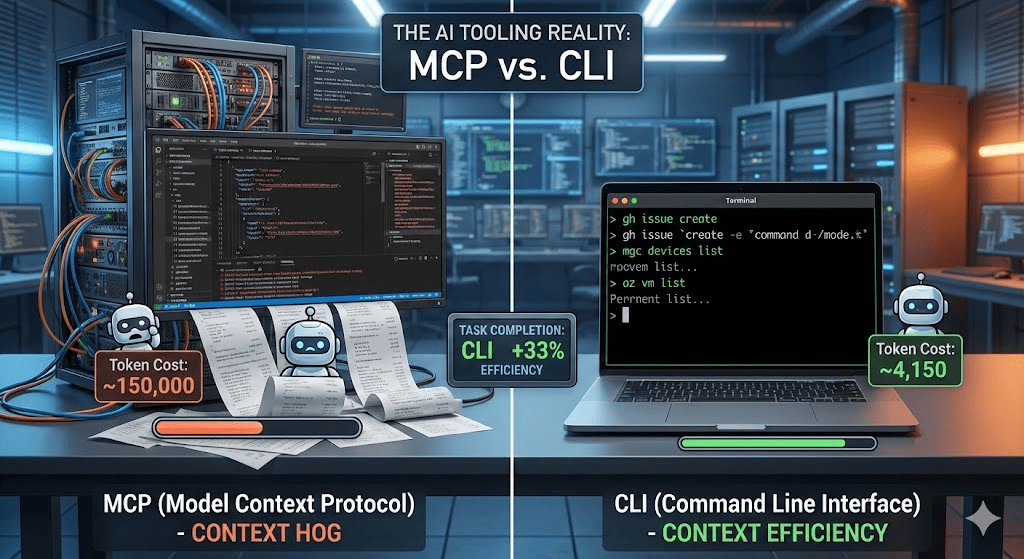

Let’s be honest: the Model Context Protocol (MCP) was supposed to be the universal connector between AI models and the real world, a clean, structured protocol that lets your AI agent talk to any tool through a standardized interface. Sounds great in theory. In practice? I’m increasingly reaching for good old CLI tools instead, and I’m not alone.

After months of building AI agent solutions and working with both approaches in real-world enterprise scenarios, here’s my take: CLI tools are the better choice in many cases, and the reason is surprisingly simple — context efficiency.