We’ve all built PowerShell scripts to query Intune, wrapped them in some automation, and called it a day. It works. But with Azure OpenAI Service and models like GPT-4.1 and GPT-5.2 optimized for tool calling, there’s a more interesting approach—building an actual AI agent that can talk to your Intune environment.

Instead of writing a script for every query, you build one agent that understands natural language and calls the Graph API on your behalf. Ask it “which Windows devices are non-compliant?” and it figures out the right API call, executes it, and summarizes the results. It’s not magic—it’s function calling with a nice interface.

In this post, I’ll walk you through two different approaches to building this agent: the classic direct SDK approach and the newer Microsoft Agent Framework. Both use the same underlying Graph API client, but differ in how they orchestrate the AI. Let’s dive in.

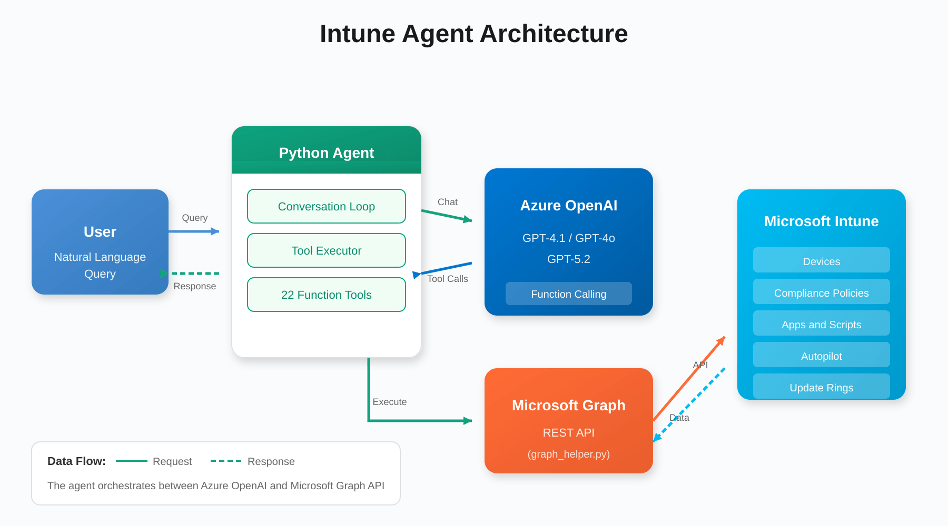

Architecture Overview

Before diving into the code, let’s understand how all the pieces fit together:

Figure 1: High-level architecture of the Intune AI Agent

The agent acts as an orchestrator between the user, Azure OpenAI, and Microsoft Graph. When you ask a question, the Python agent sends it to Microsoft Foundry (Azure OpenAI), which decides which tools to call. The agent executes those tools via the Graph API and feeds the results back to the model for interpretation.
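Under the hood, every tool ends up as a Graph REST request. As a minimal sketch (the helper names are hypothetical and not taken from graph_helper.py), this is how a tool might turn an OS name into a request against the Intune managedDevices endpoint. The OData string literal is escaped so that a quote in model-generated input cannot break the filter:

```python
from typing import Optional
from urllib.parse import quote

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def odata_string(value: str) -> str:
    """Quote a value as an OData string literal (embedded single quotes are doubled)."""
    return "'" + value.replace("'", "''") + "'"


def managed_devices_url(filter_expr: Optional[str] = None) -> str:
    """Build a GET URL for Intune managed devices, optionally with a $filter."""
    url = f"{GRAPH_BASE}/deviceManagement/managedDevices"
    if filter_expr:
        # Percent-encode spaces and quotes in the filter expression
        url += "?$filter=" + quote(filter_expr)
    return url


windows_filter = f"operatingSystem eq {odata_string('Windows')}"
print(managed_devices_url(windows_filter))
```

The actual HTTP call (with a bearer token for Graph) is left out. The point is that the model only picks the tool and its arguments, while your code decides exactly which request gets built.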

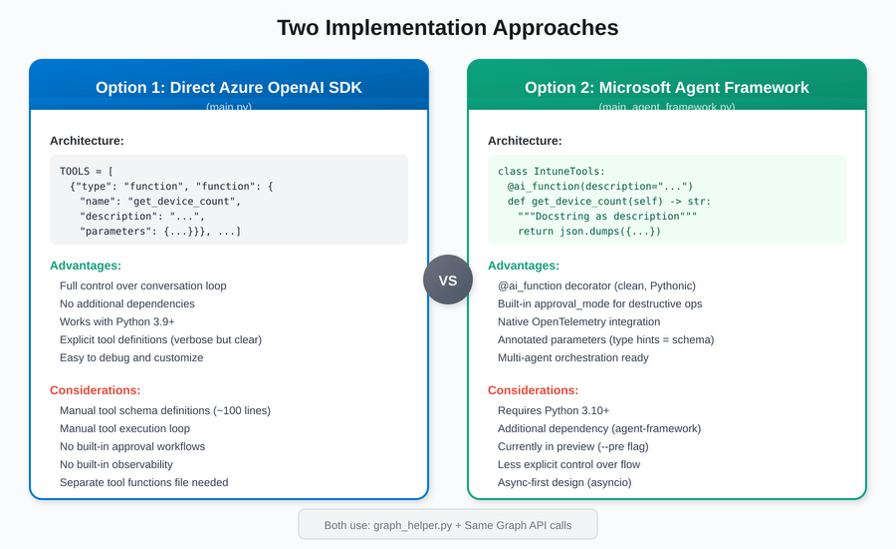

Two Implementation Approaches

This project provides two ways to build the same agent. Both share the same graph_helper.py for Graph API calls, but differ in how they integrate with Azure OpenAI:

Figure 2: Direct SDK vs. Microsoft Agent Framework

| Aspect | Direct SDK (main.py) | Agent Framework (main_agent_framework.py) |
|---|---|---|
| Python version | 3.9+ | 3.10+ required |
| Tool definition | Manual JSON schema | @ai_function decorator |
| Parameters | Explicit JSON schema | Annotated[type, "desc"] |
| Approval flow | Manual (in system prompt) | Built-in approval_mode |
| Observability | Custom logging | Native OpenTelemetry |
| Execution model | Synchronous | Async (asyncio) |
| Maturity | Production-ready | Preview (pip install --pre) |

Which should you choose? Use the Direct SDK approach if you need production stability today or are on Python 3.9. Use the Agent Framework if you want cleaner code, built-in approval workflows, and are okay with preview software.

What is Microsoft Foundry?

If you’ve been following Azure AI Studio, you might have noticed the rebrand. At Ignite 2024, Microsoft introduced Azure AI Foundry Agent Service as a public preview, and by May 2025 it went GA with over 10,000 customers already using it. The platform has since evolved into Microsoft Foundry—a unified experience for building, deploying, and managing AI agents with enterprise-grade security.

The key pieces that matter for us:

- Foundry Agent Service—The runtime that hosts your agents, handles conversations, orchestrates tool calls, and manages state

- Foundry Models—Access to GPT-5.2, GPT-4.1, Claude, and 11,000+ other models through one endpoint

- Microsoft Agent Framework—The unified SDK (successor to Semantic Kernel + AutoGen) for building agents locally

- Function Calling—Your agent can invoke custom tools—like the Microsoft Graph API

GPT-4.1 vs GPT-5.2: Which Model Should You Use?

Both models are available in Azure OpenAI Service and optimized for different scenarios.

| Aspect | GPT-4.1 | GPT-5.2 |
|---|---|---|
| Context window | 1M input tokens | 272K input tokens |
| Latency | Fast, optimized for real-time | Slower, since deep reasoning takes time |
| Tool calling | Excellent for coding and tools | Full tool support, raw payloads |
| Best for | Quick queries, real-time chat | Complex analysis, agentic workflows |

How the Conversation Flow Works

The magic happens in the tool-calling loop. Here’s the step-by-step flow when you ask the agent a question:

Figure 3: The tool-calling loop that powers the agent

The key insight is that steps 3-6 can repeat multiple times. If your question requires data from multiple sources, the AI might call multiple tools in sequence, combining the results to give you a complete answer.
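To make the repetition concrete, here is a self-contained sketch of the loop with a scripted stand-in for the model. The `fake_model` function and both tools are hypothetical placeholders that make no Azure or Graph calls. The "model" requests two tools in sequence before it answers, and the loop keeps feeding results back until no further tool call is requested:

```python
import json
from typing import Callable, Dict, List

# Hypothetical stand-ins for Graph-backed tools (fixed counts, no API calls)
FAKE_TOOLS: Dict[str, Callable[..., dict]] = {
    "get_devices_by_os": lambda operating_system: {"count": 3},
    "get_noncompliant_devices": lambda operating_system: {"count": 1},
}


def fake_model(messages: List[dict]) -> dict:
    """Scripted stand-in for the LLM: asks for two tools, then answers."""
    results = [m for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool_call": ("get_devices_by_os", {"operating_system": "Windows"})}
    if len(results) == 1:
        return {"tool_call": ("get_noncompliant_devices", {"operating_system": "Windows"})}
    total, bad = (json.loads(m["content"])["count"] for m in results)
    return {"content": f"Non-compliant: {bad} of {total} Windows devices"}


def run(question: str) -> str:
    messages = [{"role": "user", "content": question}]
    reply = fake_model(messages)
    # The loop repeats for as long as the model keeps requesting tools
    while "tool_call" in reply:
        name, args = reply["tool_call"]
        result = FAKE_TOOLS[name](**args)
        messages.append({"role": "tool", "name": name, "content": json.dumps(result)})
        reply = fake_model(messages)
    return reply["content"]


print(run("How many Windows devices are non-compliant?"))
```

A real model decides at each step whether it has enough data, but the control flow in your code is the same: execute, append, ask again.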

Approach 1: Direct Azure OpenAI SDK

This is the classic approach using the openai Python package directly. You define tools as JSON schemas and manage the conversation loop yourself.
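Because the model only returns a tool name and a JSON string of arguments, this approach needs a dispatcher that routes each call to a Python function. The loop in main.py calls an execute_tool helper whose body isn't shown here, so treat the following as a minimal sketch of one possible shape; the registry, the tool decorator, and the stub tool are all hypothetical:

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry: tool name -> Python function that would call Graph
TOOL_FUNCTIONS: Dict[str, Callable[..., Any]] = {}


def tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function under its own name."""
    TOOL_FUNCTIONS[func.__name__] = func
    return func


@tool
def get_devices_by_os(operating_system: str) -> list:
    # Stub: a real version would query Graph with an OData $filter
    return [{"deviceName": "PC-01", "operatingSystem": operating_system}]


def execute_tool(name: str, arguments: dict) -> str:
    """Run the requested tool and always return a string for the tool message."""
    func = TOOL_FUNCTIONS.get(name)
    if func is None:
        return json.dumps({"error": f"Unknown tool: {name}"})
    try:
        return json.dumps(func(**arguments))
    except Exception as exc:  # report failures to the model instead of crashing
        return json.dumps({"error": str(exc)})


print(execute_tool("get_devices_by_os", {"operating_system": "Windows"}))
```

Returning errors as JSON rather than raising keeps the conversation alive: the model sees what went wrong (an unknown tool name, a missing argument) and can retry or explain.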

Tool Definition (main.py)

Tools are defined as a list of JSON objects with explicit schemas:

```python
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_devices_by_os",
            "description": "Filter devices by operating system",
            "parameters": {
                "type": "object",
                "properties": {
                    "operating_system": {
                        "type": "string",
                        "description": "OS: Windows, iOS, Android, macOS",
                    }
                },
                "required": ["operating_system"],
            },
        },
    },
    # ... 21 more tool definitions
]
```

The Conversation Loop

You manually handle the tool execution loop:

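```python
import json

# First request: the model either answers directly or asks for tools
response = client.chat.completions.create(
    model=model,
    messages=messages,
    tools=TOOLS,
    tool_choice="auto",
)
assistant_message = response.choices[0].message

# Handle tool calls in a loop until the model returns a plain answer
while assistant_message.tool_calls:
    # The assistant turn that requested the tools must precede their results
    messages.append(assistant_message)
    for tool_call in assistant_message.tool_calls:
        result = execute_tool(
            tool_call.function.name,
            json.loads(tool_call.function.arguments),
        )
        messages.append({
            "role": "tool",
            "tool_call_id": tool_call.id,
            "content": result,
        })

    # Get next response, now including the tool results
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        tools=TOOLS,
        tool_choice="auto",
    )
    assistant_message = response.choices[0].message

print(assistant_message.content)
```

Approach 2: Microsoft Agent Framework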

The Microsoft Agent Framework (successor to Semantic Kernel + AutoGen) provides a cleaner, more Pythonic way to define tools using decorators and type hints.

Tool Definition with @ai_function

Tools are defined as methods with the @ai_function decorator:

```python
import json
from typing import Annotated

from agent_framework import ai_function


class IntuneTools:
    def __init__(self, client):
        # Graph API client wrapper from graph_helper.py
        self.client = client

    @ai_function(description="Filter devices by operating system")
    def get_devices_by_os(
        self,
        operating_system: Annotated[str, "OS: Windows, iOS, Android, macOS"],
    ) -> str:
        """Filter devices by operating system."""
        # OData filter passed to the Graph managedDevices query
        result = self.client.get_devices(
            f"operatingSystem eq '{operating_system}'"
        )
        return json.dumps({"count": len(result), "devices": result})
```

Notice how the parameter description comes from the Annotated type hint, so no separate JSON schema is needed.
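To see why no separate schema is needed, here is a rough standard-library sketch of the idea: read the Annotated metadata and emit the same JSON schema shape used in the direct SDK approach. This only illustrates the principle; it is not how agent_framework implements @ai_function, and schema_from_function is a hypothetical helper:

```python
import inspect
from typing import Annotated, get_args, get_origin, get_type_hints

# Only simple scalar types are mapped in this sketch
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def schema_from_function(func) -> dict:
    """Build an OpenAI-style function schema from Annotated type hints."""
    hints = get_type_hints(func, include_extras=True)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        if name == "self":
            continue
        hint, description = hints[name], ""
        if get_origin(hint) is Annotated:
            # Annotated[str, "desc"] -> (str, "desc")
            hint, description, *_ = get_args(hint)
        properties[name] = {"type": JSON_TYPES[hint], "description": description}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_devices_by_os(
    operating_system: Annotated[str, "OS: Windows, iOS, Android, macOS"],
) -> str:
    """Filter devices by operating system."""
    return operating_system


print(schema_from_function(get_devices_by_os))
```

The output matches the inner "function" object of the hand-written TOOLS entry in main.py, which is exactly the boilerplate the decorator approach lets you skip.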

Built-in Approval Mode for Destructive Actions

One killer feature is built-in approval workflows:

```python
class IntuneTools:
    # ... __init__, get_devices_by_os, and the other tools ...

    @ai_function(
        description="Retire a device from Intune. USE WITH CAUTION!",
        approval_mode="always_require",  # Built-in human-in-the-loop!
    )
    def retire_device(
        self,
        device_id: Annotated[str, "The device ID to retire"],
    ) -> str:
        result = self.client.retire_device(device_id)
        return json.dumps(result)
```

With approval_mode="always_require", the framework pauses before executing the function and returns an approval request instead of running it. Your application shows that request to the user, and the destructive operation only runs after they confirm.
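In the direct SDK version, the comparison table lists approval as manual (handled in the system prompt). Prompt instructions alone do not stop the model from requesting a destructive call, so a code-level gate is a sensible complement. A minimal sketch, assuming a hypothetical DESTRUCTIVE_TOOLS set and a confirm callback that you supply:

```python
import json
from typing import Callable, Dict

# Hypothetical set of tools that must never run without a human "yes";
# extend it with any other write operations you expose
DESTRUCTIVE_TOOLS = {"retire_device"}


def execute_with_approval(
    name: str,
    arguments: dict,
    tools: Dict[str, Callable[..., object]],
    confirm: Callable[[str], bool],
) -> str:
    """Run a tool, asking for confirmation first if it is destructive."""
    if name in DESTRUCTIVE_TOOLS:
        prompt = f"Approve {name} with {json.dumps(arguments)}?"
        if not confirm(prompt):
            # Tell the model the action was declined so it can respond accordingly
            return json.dumps({"status": "declined", "tool": name})
    return json.dumps(tools[name](**arguments))


def cli_confirm(prompt: str) -> bool:
    """Interactive confirmation for a terminal session."""
    return input(f"{prompt} [y/N] ").strip().lower() == "y"
```

Because the check sits in the dispatcher rather than in the prompt, a retire request cannot slip through even if the model ignores its instructions; the declined result is still returned as a tool message, so the loop continues normally.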

Simplified Agent Creation

Creating and running the agent is also much simpler:

from agent_framework.azure import AzureOpenAIChatClient

client = AzureOpenAIChatClient(

endpoint=os.environ['AZURE_OPENAI_ENDPOINT'],

credential=credential,

api_version="2024-10-21"

)

agent = client.create_agent(

name="IntuneAgent",

instructions=SYSTEM_PROMPT,

model=os.environ['MODEL_DEPLOYMENT_NAME']

)

# Run with all tools - framework handles the loop!

result = await agent.run(

user_input,

tools=intune_tools.get_all_tools()

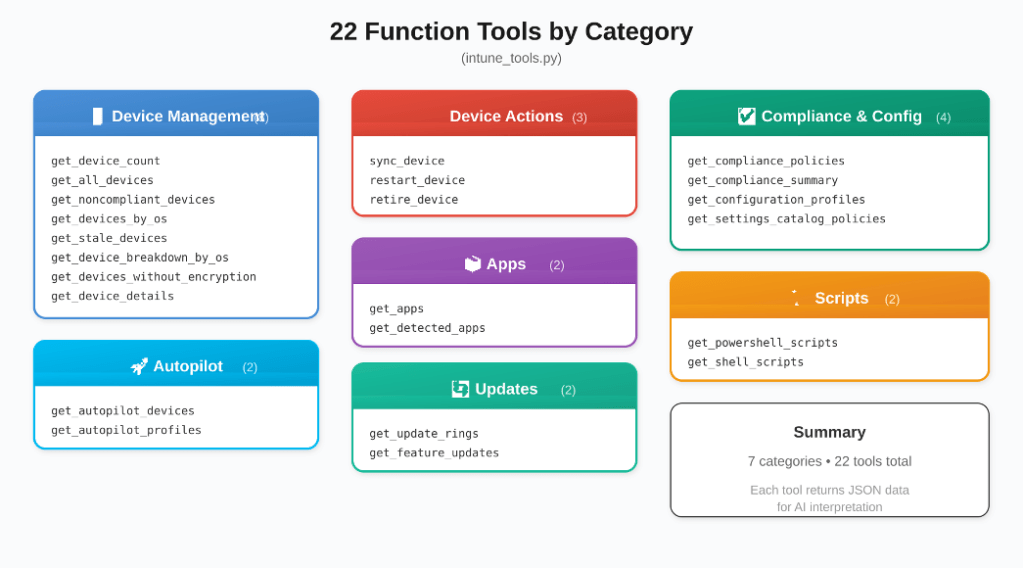

)Available Tools (Both Approaches)

Both implementations provide the same 22 tools across 7 categories:

Figure 4: All 22 function tools organized by category

Quick Start

Both approaches share the same setup:

```bash
# Clone and set up
git clone https://github.com/JayRHa/IntuneAgent
cd IntuneAgent
python -m venv venv
source venv/bin/activate

# Install dependencies
pip install -r requirements.txt

# Configure Azure (creates app registration automatically)
az login
./setup.sh

# Run your preferred approach
python main.py                  # Direct SDK
python main_agent_framework.py  # Agent Framework
```

What Can You Actually Do With This?

- “Show me all non-compliant devices”—Instant compliance dashboard

- “Which Windows devices haven’t synced in 48 hours?”—Troubleshooting stale devices

- “Break down our fleet by OS”—Quick inventory analysis

- “Find devices without disk encryption”—Security auditing

- “Sync all Windows devices”—Batch operations

- “Show me Autopilot deployment status”—Deployment tracking
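A question like the 48-hour sync check comes down to a date filter. Here is a minimal sketch of building that OData expression, assuming the tool filters managedDevices on lastSyncDateTime. The helper name is hypothetical, and now is passed in so the result is deterministic:

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def stale_devices_filter(hours: int = 48, os_name: Optional[str] = None,
                         now: Optional[datetime] = None) -> str:
    """OData $filter for devices whose last sync is at least `hours` old."""
    now = now or datetime.now(timezone.utc)
    cutoff = (now - timedelta(hours=hours)).astimezone(timezone.utc)
    # OData DateTimeOffset literals are written unquoted, e.g. 2025-06-01T12:00:00Z
    expr = f"lastSyncDateTime le {cutoff.strftime('%Y-%m-%dT%H:%M:%SZ')}"
    if os_name:
        escaped = os_name.replace("'", "''")  # OData string-literal escaping
        expr = f"operatingSystem eq '{escaped}' and {expr}"
    return expr


fixed_now = datetime(2025, 6, 3, 12, 0, tzinfo=timezone.utc)
print(stale_devices_filter(48, "Windows", now=fixed_now))
```

Computing the cutoff in code, rather than letting the model write a timestamp into the filter, avoids off-by-a-day mistakes and time-zone confusion in the generated query.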

The Bottom Line

Building an AI agent for Intune isn’t science fiction anymore. With Azure OpenAI Service and the Graph API, you can create something genuinely useful in an afternoon. The hardest part is honestly just setting up the app registration permissions.
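Since permissions are the sticking point, it helps to fail fast when the app registration is missing a scope. A minimal sketch, assuming a hand-maintained mapping from tool to Microsoft Graph application permission. The mapping is illustrative (get_autopilot_devices is a hypothetical tool name), so check the Graph permission reference for the tools you actually enable:

```python
from typing import Dict, Iterable, List

# Illustrative tool -> Graph application permission map; verify before use.
# "get_autopilot_devices" is a hypothetical tool name.
REQUIRED_PERMISSIONS: Dict[str, str] = {
    "get_devices_by_os": "DeviceManagementManagedDevices.Read.All",
    "retire_device": "DeviceManagementManagedDevices.PrivilegedOperations.All",
    "get_autopilot_devices": "DeviceManagementServiceConfig.Read.All",
}


def is_granted(permission: str, granted: set) -> bool:
    """A ReadWrite permission also covers the matching Read permission."""
    return permission in granted or permission.replace(".Read.", ".ReadWrite.") in granted


def missing_permissions(tools: Iterable[str], granted: Iterable[str]) -> List[str]:
    """Return the permissions the enabled tools need but the app lacks."""
    granted_set = set(granted)
    needed = {REQUIRED_PERMISSIONS[t] for t in tools if t in REQUIRED_PERMISSIONS}
    return sorted(p for p in needed if not is_granted(p, granted_set))


# e.g. the "roles" claim of an app-only access token
roles = ["DeviceManagementManagedDevices.ReadWrite.All"]
print(missing_permissions(REQUIRED_PERMISSIONS, roles))
```

Running a check like this at startup turns a confusing 403 halfway through a conversation into a clear message before the agent accepts its first question.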

The Direct SDK approach gives you full control and works today in production. The Agent Framework approach is cleaner and more powerful, with built-in approval workflows and observability—but it’s still in preview. Choose based on your needs.

The full code for both approaches is available on GitHub. If you build something cool with this, let me know—I’d love to see what people create.

Resources

Microsoft Agent Framework Overview

Agent Framework GitHub Repository

Microsoft Foundry Documentation

Azure OpenAI Service Models

Microsoft Graph API for Intune

GPT-4.1 Announcement

GPT-5.2 Announcement
